Difference between revisions of "Robots.txt"

Ralph.ebnet (talk | contribs) (→What role does robots.txt play in search engine optimization?) |

(→Similar articles) |

||

| (7 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

| − | <seo title="What is a | + | <seo title="What is a Robots.txt File and how do you create it?" metadescription="Robots.txt is a text file with instructions for search engine crawlers. It defines which areas of a website crawlers are allowed to search." /> |

| + | |||

== What is robots.txt? == | == What is robots.txt? == | ||

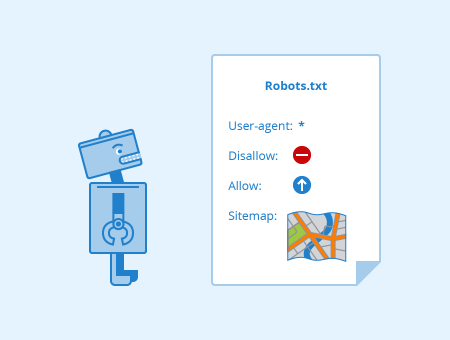

| − | + | [[File:Robots-txt.png|thumb|450px|right|alt=Robots.txt|'''Figure:''' Robots.txt - Author: Seobility - License: [[Creative Commons License BY-SA 4.0|CC BY-SA 4.0]]|link=https://www.seobility.net/en/wiki/images/2/20/Robots-txt.png]] | |

| − | Robots.txt is a text file with instructions for [[Search Engine Crawlers|search engine crawlers]]. It defines which areas of | + | Robots.txt is a text file with instructions for bots (mostly [[Search Engine Crawlers|search engine crawlers]]) trying to access a website. It defines which areas of the site crawlers are allowed or disallowed to access. You can easily exclude entire domains, complete directories, one or more subdirectories, or individual files from search engine crawling using this simple text file. However, this file does not protect against unauthorized access. |

Robots.txt is stored in the root directory of a domain. Thus it is the first document that crawlers open when visiting your site. However, the file does not only control crawling. You can also integrate a link to your sitemap, which gives search engine crawlers an overview of all existing URLs of your domain. | Robots.txt is stored in the root directory of a domain. Thus it is the first document that crawlers open when visiting your site. However, the file does not only control crawling. You can also integrate a link to your sitemap, which gives search engine crawlers an overview of all existing URLs of your domain. | ||

| Line 21: | Line 22: | ||

== How robots.txt works == | == How robots.txt works == | ||

| − | In 1994, a protocol called REP (Robots Exclusion Standard Protocol) was published. This protocol stipulates that all search engine crawlers (user-agents) must first search for the robots.txt file in the root directory of your site and read the instructions it contains. Only then, robots can start indexing your web page. The file must be located directly in the root directory of your domain and must be written in lower case because robots read the robots.txt file and its instructions case-sensitive. Unfortunately, not all search engine robots follow these rules. At least the file works with the most important search engines like Bing, Yahoo, and Google. Their search robots strictly follow the REP and robots.txt instructions. | + | In 1994, a protocol called REP (Robots Exclusion Standard Protocol) was published. This protocol stipulates that all search engine crawlers (user-agents) must first search for the robots.txt file in the root directory of your site and read the instructions it contains. Only then, robots can start indexing your web page. The file must be located directly in the root directory of your domain and must be written in lower case because robots read the robots.txt file and its instructions [[Case Sensitivity|case-sensitive]]. Unfortunately, not all search engine robots follow these rules. At least the file works with the most important search engines like Bing, Yahoo, and Google. Their search robots strictly follow the REP and robots.txt instructions. |

| − | In practice, robots.txt can be used for different types of files. If you use it for image files, it prevents these files from appearing in the Google search results. Unimportant resource files, such as script, style, and image files, can also be blocked easily with robots.txt. In addition, you can exclude dynamically generated web pages from crawling using appropriate commands. For example, result pages of an internal search function, pages with session IDs or user actions such as shopping carts can be blocked. You can also control crawler access to other non-image files (web pages) by using the text file. Thereby, you can avoid the following scenarios: | + | In practice, robots.txt can be used for different types of files. If you use it for image files, it prevents these files from appearing in the Google search results. Unimportant resource files, such as script, style, and image files, can also be blocked easily with robots.txt. In addition, you can exclude dynamically generated web pages from crawling using appropriate commands. For example, result pages of an internal search function, pages with [[Session ID|session IDs]] or user actions such as shopping carts can be blocked. You can also control crawler access to other non-image files (web pages) by using the text file. Thereby, you can avoid the following scenarios: |

* search robots crawl lots of similar or unimportant web pages | * search robots crawl lots of similar or unimportant web pages | ||

| Line 33: | Line 34: | ||

<pre><meta name="robots" content="noindex"></pre> | <pre><meta name="robots" content="noindex"></pre> | ||

| − | However, you should not block files that are of high relevance for search robots. Note that CSS and JavaScript files should also be unblocked, as these are used for crawling especially by mobile robots. | + | However, you should not block files that are of high relevance for search robots. Note that CSS and [[Javascript|JavaScript]] files should also be unblocked, as these are used for crawling especially by mobile robots. |

== Which instructions are used in robots.txt? == | == Which instructions are used in robots.txt? == | ||

| Line 64: | Line 65: | ||

== What role does robots.txt play in search engine optimization? == | == What role does robots.txt play in search engine optimization? == | ||

| − | The instructions in a robots.txt file have a strong influence on SEO (Search Engine Optimization) as the file allows you to control search robots. However, if [[User Agent|user agents]] are restricted too much by disallow instructions, this | + | The instructions in a robots.txt file have a strong influence on SEO (Search Engine Optimization) as the file allows you to control search robots. However, if [[User Agent|user agents]] are restricted too much by disallow instructions, this could have a negative effect on the ranking of your website. You also have to consider that you won’t rank with web pages you have excluded by disallow in robots.txt. |

Before you save the file in the root directory of your website, you should check the syntax. Even minor errors can lead to search bots disregarding the disallow rules and crawling websites that should not be indexed. Such errors can also result in pages no longer being accessible for search bots and entire URLs not being indexed because of disallow. You can check the correctness of your robots.txt using [[Google Search Console]]. Under "Current Status" and "Crawl Errors", you will find all pages blocked by the disallow instructions. | Before you save the file in the root directory of your website, you should check the syntax. Even minor errors can lead to search bots disregarding the disallow rules and crawling websites that should not be indexed. Such errors can also result in pages no longer being accessible for search bots and entire URLs not being indexed because of disallow. You can check the correctness of your robots.txt using [[Google Search Console]]. Under "Current Status" and "Crawl Errors", you will find all pages blocked by the disallow instructions. | ||

| − | By using robots.txt correctly you can ensure that all important parts of your website are crawled by search bots. Consequently, your | + | By using robots.txt correctly you can ensure that all important parts of your website are crawled by search bots. Consequently, your important page content can get indexed by Google and other search engines. |

== Related links == | == Related links == | ||

| Line 82: | Line 83: | ||

[[Category:Search Engine Optimization]] | [[Category:Search Engine Optimization]] | ||

[[Category:Web Development]] | [[Category:Web Development]] | ||

| + | |||

| + | <html><script type="application/ld+json"> | ||

| + | { | ||

| + | "@context": "https://schema.org/", | ||

| + | "@type": "ImageObject", | ||

| + | "contentUrl": "https://www.seobility.net/en/wiki/images/2/20/Robots-txt.png", | ||

| + | "license": "https://creativecommons.org/licenses/by-sa/4.0/", | ||

| + | "acquireLicensePage": "https://www.seobility.net/en/wiki/Creative_Commons_License_BY-SA_4.0" | ||

| + | } | ||

| + | </script></html> | ||

| + | |||

| + | {| class="wikitable" style="text-align:left" | ||

| + | |- | ||

| + | |'''About the author''' | ||

| + | |- | ||

| + | | [[File:Seobility S.jpg|link=|100px|left|alt=Seobility S]] The Seobility Wiki team consists of seasoned SEOs, digital marketing professionals, and business experts with combined hands-on experience in SEO, online marketing and web development. All our articles went through a multi-level editorial process to provide you with the best possible quality and truly helpful information. Learn more about <html><a href="https://www.seobility.net/en/wiki/Seobility_Wiki_Team" target="_blank">the people behind the Seobility Wiki</a></html>. | ||

| + | |} | ||

| + | |||

| + | <html><script type="application/ld+json"> | ||

| + | { | ||

| + | "@context": "https://schema.org", | ||

| + | "@type": "Article", | ||

| + | "author": { | ||

| + | "@type": "Organization", | ||

| + | "name": "Seobility", | ||

| + | "url": "https://www.seobility.net/" | ||

| + | } | ||

| + | } | ||

| + | </script></html> | ||

Latest revision as of 17:12, 6 December 2023


What is robots.txt?

Robots.txt is a text file with instructions for bots (mostly search engine crawlers) trying to access a website. It defines which areas of the site crawlers are allowed or disallowed to access. You can easily exclude entire domains, complete directories, one or more subdirectories, or individual files from search engine crawling using this simple text file. However, this file does not protect against unauthorized access.

Robots.txt is stored in the root directory of a domain. Thus it is the first document that crawlers open when visiting your site. However, the file does not only control crawling. You can also integrate a link to your sitemap, which gives search engine crawlers an overview of all existing URLs of your domain.


How robots.txt works

In 1994, a protocol called REP (Robots Exclusion Protocol, also known as the Robots Exclusion Standard) was published. This protocol stipulates that all search engine crawlers (user-agents) must first look for the robots.txt file in the root directory of your site and read the instructions it contains. Only then may robots start crawling your web pages. The file must be located directly in the root directory of your domain, and its name must be written in lower case, because crawlers treat the file name and the URL paths in its rules as case-sensitive. Unfortunately, not all robots follow these rules, as compliance is voluntary. However, the file works with the most important search engines such as Bing, Yahoo, and Google, whose search robots follow the REP and the instructions in robots.txt.
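Because the file always sits in the root directory, its address can be derived from any page URL on the same host. The following is a minimal sketch of that lookup; the function name `robots_txt_url` is hypothetical and only serves as an illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL for the host that serves page_url."""
    parts = urlsplit(page_url)
    # Keep scheme and host (including any port), replace the path
    # with /robots.txt, and drop the query string and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.example.com/shop/cart?item=42"))
```

Note that the file name in the generated URL is lower case, matching the requirement described above.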

In practice, robots.txt can be used for different types of files. If you use it for image files, it prevents these files from appearing in the Google search results. Unimportant resource files, such as script, style, and image files, can also be blocked with robots.txt, as long as your pages can still be rendered and understood without them. In addition, you can exclude dynamically generated web pages from crawling using appropriate commands. For example, result pages of an internal search function, pages with session IDs, or user actions such as shopping carts can be blocked. You can also control crawler access to other non-image files (web pages) by using the text file. This helps you avoid the following scenarios:

- search robots crawl lots of similar or unimportant web pages

- your crawl budget is wasted unnecessarily

- your server is overloaded by crawlers

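In this context, however, note that robots.txt does not guarantee that your site or individual sub-pages are not indexed. It only controls the crawling of your website, not the indexing. A blocked URL can still end up in the index, for example if other pages link to it. If web pages are not to be indexed by search engines, you have to set the following meta tag in the head section of the page. The page itself must not be blocked in robots.txt, because crawlers can only see the tag on pages they are allowed to crawl: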

<meta name="robots" content="noindex">

However, you should not block files that are of high relevance for search robots. Note that CSS and JavaScript files should also remain unblocked, as search engines need them to render your pages, especially when crawling with mobile robots.
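To verify that a page really carries the noindex meta tag, the HTML can be inspected with a small script. The sketch below uses only Python's standard `html.parser` module; the class name `RobotsMetaParser` and the function `has_noindex` are illustrative names, and the check only covers the meta tag, not HTTP headers.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() != "robots":
            return
        content = attributes.get("content") or ""
        # The content attribute is a comma-separated list of directives.
        for part in content.split(","):
            if part.strip():
                self.directives.add(part.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the HTML contains a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


page = '<html><head><meta name="robots" content="noindex"></head></html>'
print(has_noindex(page))
```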

Which instructions are used in robots.txt?

Your robots.txt has to be saved as a UTF-8 or ASCII text file in the root directory of your website. There must be only one file with this name. It contains one or more rule sets structured in a clearly readable format. The rules (instructions) are read from top to bottom, and upper and lower case letters in paths are distinguished.

The following terms are used in a robots.txt file:

- user-agent: denotes the name of the crawler (the names can be found in the Robots Database)

- disallow: prevents crawling of certain files, directories or web pages

- allow: overrides disallow and allows crawling of files, web pages, and directories

- sitemap (optional): shows the location of the sitemap

- *: stands for any number of characters

- $: stands for the end of the URL
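How allow overrides disallow can be illustrated with a short sketch. It assumes the precedence described in RFC 9309, where the longest matching path wins and allow wins a tie; individual crawlers may behave differently. The function name `is_allowed` is hypothetical, and the sketch only handles plain path prefixes without wildcards.

```python
def is_allowed(path, rules):
    """Decide whether path may be crawled.

    rules is a list of (directive, prefix) tuples, where directive is
    "allow" or "disallow". Assumption: the longest matching prefix wins,
    and allow wins when both prefixes have the same length.
    """
    best_length = -1
    allowed = True  # no matching rule means crawling is allowed
    for directive, prefix in rules:
        # An empty prefix matches nothing, and non-matching rules are skipped.
        if not prefix or not path.startswith(prefix):
            continue
        is_allow = directive.lower() == "allow"
        longer = len(prefix) > best_length
        tie_for_allow = len(prefix) == best_length and is_allow
        if longer or tie_for_allow:
            best_length = len(prefix)
            allowed = is_allow
    return allowed


rules = [("disallow", "/clients/"), ("allow", "/clients/public/")]
print(is_allowed("/clients/public/info.html", rules))
print(is_allowed("/clients/private.html", rules))
```

With these rules, the more specific allow entry opens up /clients/public/ while the rest of /clients/ stays blocked.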

The instructions (entries) in robots.txt always consist of two parts. In the first part, you define which robots (user-agents) the following instructions apply to. The second part contains the instruction (disallow or allow). For example, "user-agent: Googlebot" followed by "disallow: /clients/" means that Googlebot is not allowed to crawl the directory /clients/. If the entire website is not to be crawled by any search bot, the entry is "user-agent: *" with the instruction "disallow: /". You can use the dollar sign "$" to block web pages that have a certain extension. The statement "disallow: /*.doc$" blocks all URLs that end in .doc. In the same way, you can block other file formats in robots.txt, for example with "disallow: /*.jpg$".
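The effect of "*" and "$" can be made concrete by translating a rule path into a regular expression. This is a minimal sketch of that idea, not the matching code of any particular crawler; the function names `rule_to_regex` and `rule_matches` are hypothetical.

```python
import re


def rule_to_regex(rule: str) -> re.Pattern:
    """Translate a robots.txt path rule into a compiled regular expression."""
    anchored = rule.endswith("$")
    if anchored:
        rule = rule[:-1]
    # Escape every literal piece, then let "*" match any run of characters.
    pieces = [re.escape(piece) for piece in rule.split("*")]
    pattern = "^" + ".*".join(pieces)
    if anchored:
        pattern += "$"  # "$" pins the rule to the end of the URL
    return re.compile(pattern)


def rule_matches(rule: str, path: str) -> bool:
    """Return True if the URL path is covered by the rule."""
    return rule_to_regex(rule).match(path) is not None


print(rule_matches("/*.doc$", "/files/report.doc"))
print(rule_matches("/*.doc$", "/files/report.docx"))
print(rule_matches("/clients/", "/clients/list.html"))
```

Without the trailing "$", a rule behaves like a prefix, which is why "/clients/" also covers everything below that directory.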

For example, the robots.txt file for the website https://www.example.com/ could look like this:

<pre>
User-agent: *
Disallow: /login/
Disallow: /card/
Disallow: /fotos/
Disallow: /temp/
Disallow: /search/
Disallow: /*.pdf$

Sitemap: https://www.example.com/sitemap.xml
</pre>
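The example file can be tested locally with Python's standard `urllib.robotparser` module. The sketch below parses the rules from a string instead of fetching them; the crawler name "ExampleBot" is an assumption made for illustration. Note that this module matches plain path prefixes only, so the wildcard rule "/*.pdf$" is not evaluated the way Google or Bing would evaluate it.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /login/
Disallow: /card/
Disallow: /fotos/
Disallow: /temp/
Disallow: /search/
Disallow: /*.pdf$
Sitemap: https://www.example.com/sitemap.xml
"""

robots = RobotFileParser()
# parse() takes the file content as a list of lines.
robots.parse(ROBOTS_TXT.splitlines())

# "ExampleBot" is a hypothetical crawler name; it falls under "User-agent: *".
print(robots.can_fetch("ExampleBot", "https://www.example.com/login/"))
print(robots.can_fetch("ExampleBot", "https://www.example.com/about/"))

# site_maps() is available in Python 3.8 and later.
print(robots.site_maps())
```

The first check should report the login directory as blocked, while the second URL is not covered by any rule and remains crawlable.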

What role does robots.txt play in search engine optimization?

The instructions in a robots.txt file have a strong influence on SEO (Search Engine Optimization) as the file allows you to control search robots. However, if user agents are restricted too much by disallow instructions, this could have a negative effect on the ranking of your website. You also have to keep in mind that web pages you have excluded by disallow in robots.txt will generally not rank.

Before you save the file in the root directory of your website, you should check the syntax. Even minor errors can lead to search bots disregarding the disallow rules and crawling pages that should not be crawled. Such errors can also result in pages no longer being accessible to search bots and entire URLs not being indexed because of disallow. You can check the correctness of your robots.txt using Google Search Console. Under "Current Status" and "Crawl Errors", you will find all pages blocked by the disallow instructions.
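A rough local check can catch simple typos before the file is uploaded. The following sketch is not a full validator and does not replace the check in Google Search Console; it only knows the four directives listed in this article, so other directives that some crawlers support would be reported as unknown. The function name `find_syntax_problems` is hypothetical.

```python
KNOWN_DIRECTIVES = {"user-agent", "disallow", "allow", "sitemap"}


def find_syntax_problems(robots_txt):
    """Return a list of (line_number, message) tuples for suspicious lines."""
    problems = []
    seen_user_agent = False
    for number, raw_line in enumerate(robots_txt.splitlines(), start=1):
        line = raw_line.split("#", 1)[0].strip()  # drop comments
        if not line:
            continue
        if ":" not in line:
            problems.append((number, "missing ':' separator"))
            continue
        directive, value = (part.strip() for part in line.split(":", 1))
        directive = directive.lower()
        if directive not in KNOWN_DIRECTIVES:
            problems.append((number, f"unknown directive '{directive}'"))
        elif directive == "user-agent":
            seen_user_agent = True
        elif directive in ("allow", "disallow"):
            if not seen_user_agent:
                problems.append((number, "rule appears before any user-agent line"))
            if value and not value.startswith(("/", "*")):
                problems.append((number, "path should start with '/'"))
    return problems


print(find_syntax_problems("User-agent: *\nDisalow: /login/\n"))
```

An empty result only means that none of these simple checks failed, not that the file behaves as intended.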

By using robots.txt correctly, you can ensure that all important parts of your website are crawled by search bots. Consequently, your important page content can get indexed by Google and other search engines.

Related links

- https://support.google.com/webmasters/answer/6062608?hl=en

- https://support.google.com/webmasters/answer/6062596?hl=en
