Difference between revisions of "GET Parameters"

(→GET parameters and duplicate content) |

(→Similar articles) |

||

| (31 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

| − | <seo title="GET Parameters: Definition" metadescription=" | + | <seo title="GET Parameters: Definition" metadescription="GET parameters are used when a client, such as a browser, requests a particular resource from a web server using the HTTP protocol." /> |

== Definition == | == Definition == | ||

| + | [[File:GET-Parameters.png|thumb|450px|right|alt=GET Parameters|'''Figure:''' GET Parameters - Author: Seobility - License: [[Creative Commons License BY-SA 4.0|CC BY-SA 4.0]]|link=https://www.seobility.net/en/wiki/images/4/49/GET-Parameters.png]] | ||

| − | GET parameters (also called URL parameters) are used when a client, such as a browser, requests a particular resource from a web server using the HTTP protocol | + | GET parameters (also called URL parameters or query strings) are used when a client, such as a browser, requests a particular resource from a web server using the HTTP protocol. |

| − | = | + | These parameters are usually name-value pairs, separated by an equals sign <code>=</code>. They can be used for a variety of things, as explained below. |

| − | + | == What do URL parameters look like? == | |

| − | + | An example URL could look like this: | |

| − | + | <pre>https://www.example.com/index.html?name1=value1&name2=value2</pre> | |

| − | < | + | GET parameters always start with a question mark <code>?</code>. This is followed by the name of the variable and the corresponding value, separated by an <code>=</code>. If an URL contains more than one parameter, they are separated by an Ampersand <code>&</code>. |

| − | + | [[File:Get-parameter-zaful.png|border|link=|650px|alt=GET Parameter|Screenshot showing what GET parameters look like]] | |

| − | + | Screenshot of [https://www.zaful.com/men-e_118/?odr=new-arrivals Zaful.com] showing GET parameters. | |

| − | + | == Using Get Parameters == | |

| − | + | GET parameters can be divided into <strong>active and passive</strong>. Active parameters modify the content of a page, for example, by: | |

| − | + | * '''Filtering content:''' <code>?type=green</code> displays only green products on an e-commerce site. | |

| + | * '''Sorting contents:''' <code>?sort=price_ascending</code> sorts the displayed products by price, in this case ascending. | ||

| − | + | Passive GET parameters, on the other hand, do not change a page's content and are primarily used to collect user data. Application examples are among others: | |

| − | + | * '''Tracking of [[Session ID]]s:''' ?sessionid=12345 This allows visits of individual users to be saved if cookies were rejected. | |

| + | * '''Tracking of [[Website Traffic|website traffic]]:'''<code> ?utm_source=google</code> URL parameters can be used to track where your website visitors came from. These UTM (Urchin Tracking Module) parameters work with analytics tools and can help evaluate the success of a campaign. Besides <code>source</code>, there are <code>utm_medium</code>, <code>utm_campaign</code>, <code>utm_term</code>, and <code>utm_content</code>. More information can be found at Google's [https://ga-dev-tools.appspot.com/campaign-url-builder/ Campaign URL Builder]. | ||

| − | == GET parameters and | + | == Potential problems related to GET parameters == |

| + | |||

| + | Too many subpages with URL parameters can negatively impact a website's rankings. The most common problems regarding GET parameters are <strong>duplicate content, wasted crawl budget, and illegible URLs.</strong> | ||

| + | |||

| + | === Duplicate content === | ||

Generating GET parameters, for example, based on website filter settings, can cause serious problems with [[Duplicate Content|duplicate content]] on e-commerce sites. When shop visitors can use filters to sort or narrow the content on a page, additional URLs are generated. This happens even though the content of the pages does not necessarily differ. The following example illustrates this problem: | Generating GET parameters, for example, based on website filter settings, can cause serious problems with [[Duplicate Content|duplicate content]] on e-commerce sites. When shop visitors can use filters to sort or narrow the content on a page, additional URLs are generated. This happens even though the content of the pages does not necessarily differ. The following example illustrates this problem: | ||

<div style="border-style:solid; border-width:1px;border-color:rgb(170, 170, 170);padding:10px;"> | <div style="border-style:solid; border-width:1px;border-color:rgb(170, 170, 170);padding:10px;"> | ||

| − | If the URL of an e-commerce page contains the complete list of all existing products in ascending alphabetical order and the user sorts the products in descending order, the URL for the new sort is /all-products.html?sort=Z-A. In this case, the new URL has the same content as the original page, but in a different order. This is problematic because Google could consider this duplicate content. The consequence is that Google cannot determine the page to be displayed in the search results. Another problem is that Google does not know which ranking signals should be assigned to each URL, which divides the website’s ranking properties between the two subpages. | + | If the URL of an e-commerce page contains the complete list of all existing products in ascending alphabetical order and the user sorts the products in descending order, the URL for the new sort is /all-products.html?sort=Z-A. In this case, the new URL has the same content as the original page, but in a different order. This is problematic because Google could consider this duplicate content. The consequence is that Google cannot determine the page to be displayed in the search results. Another problem is that Google does not know which ranking signals should be assigned to each URL, which divides the website’s ranking properties between the two subpages.</div> |

| − | </div> | ||

One solution to this problem is to uniquely define the relationship between the pages using [[Canonical Tag|canonical tags]]. | One solution to this problem is to uniquely define the relationship between the pages using [[Canonical Tag|canonical tags]]. | ||

| Line 47: | Line 53: | ||

Canonical tags are therefore a simple solution to guide [[Search Engine Crawlers|search engine crawlers]] to the content they are supposed to index. | Canonical tags are therefore a simple solution to guide [[Search Engine Crawlers|search engine crawlers]] to the content they are supposed to index. | ||

| − | + | === Waste of crawl budget === | |

| + | |||

| + | Google crawls a limited number of URLs per website. This amount of URLs is called crawl budget. More information can be found on the [https://www.seobility.net/en/blog/crawl-budget-optimization/ Seobility Blog]. | ||

| + | |||

| + | If a website has many crawlable URLs due to the use of URL parameters, Googlebot might spend the crawl budget for the wrong pages. One method to prevent this problem is the [[Robots.txt|robots.txt]]. This can be used to specify that the Googlebot is not supposed to crawl URLs with certain parameters. | ||

| − | + | === Illegible URLs === | |

| + | |||

| + | Too many parameters within a URL can make it difficult for users to read and remember the URL. Worst case, this can damage [[Usability|usability]] and [[CTR (Click-Through Rate)|click-through rates]]. | ||

| + | |||

| + | Generally, duplicate content, as well as problems with crawl budget, can be at least partially prevented by avoiding unnecessary parameters in a URL. | ||

| + | |||

| + | == Related links == | ||

| + | |||

| + | * https://support.google.com/google-ads/answer/6277564?hl=en | ||

== Similar articles == | == Similar articles == | ||

| Line 57: | Line 75: | ||

[[Category:Web Development]] | [[Category:Web Development]] | ||

[[Category:Search Engine Optimization]] | [[Category:Search Engine Optimization]] | ||

| + | |||

| + | <html><script type="application/ld+json"> | ||

| + | { | ||

| + | "@context": "https://schema.org/", | ||

| + | "@type": "ImageObject", | ||

| + | "contentUrl": "https://www.seobility.net/en/wiki/images/4/49/GET-Parameters.png", | ||

| + | "license": "https://creativecommons.org/licenses/by-sa/4.0/", | ||

| + | "acquireLicensePage": "https://www.seobility.net/en/wiki/Creative_Commons_License_BY-SA_4.0" | ||

| + | } | ||

| + | </script></html> | ||

| + | |||

| + | {| class="wikitable" style="text-align:left" | ||

| + | |- | ||

| + | |'''About the author''' | ||

| + | |- | ||

| + | | [[File:Seobility S.jpg|link=|100px|left|alt=Seobility S]] The Seobility Wiki team consists of seasoned SEOs, digital marketing professionals, and business experts with combined hands-on experience in SEO, online marketing and web development. All our articles went through a multi-level editorial process to provide you with the best possible quality and truly helpful information. Learn more about <html><a href="https://www.seobility.net/en/wiki/Seobility_Wiki_Team" target="_blank">the people behind the Seobility Wiki</a></html>. | ||

| + | |} | ||

| + | |||

| + | <html><script type="application/ld+json"> | ||

| + | { | ||

| + | "@context": "https://schema.org", | ||

| + | "@type": "Article", | ||

| + | "author": { | ||

| + | "@type": "Organization", | ||

| + | "name": "Seobility", | ||

| + | "url": "https://www.seobility.net/" | ||

| + | } | ||

| + | } | ||

| + | </script></html> | ||

Latest revision as of 18:27, 4 December 2023

Contents

Definition

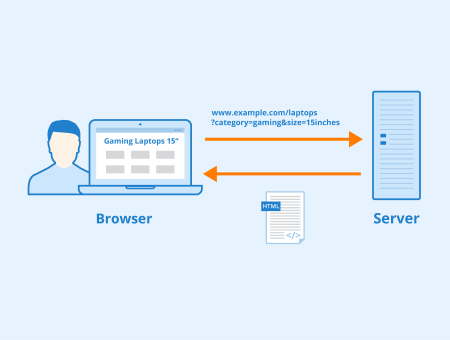

GET parameters (also called URL parameters or query strings) are used when a client, such as a browser, requests a particular resource from a web server using the HTTP protocol.

These parameters are usually name-value pairs, separated by an equals sign =. They can be used for a variety of things, as explained below.

What do URL parameters look like?

An example URL could look like this:

https://www.example.com/index.html?name1=value1&name2=value2

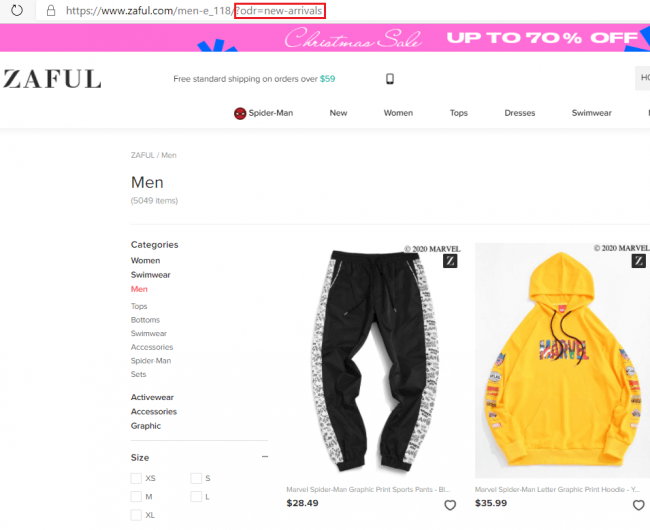

GET parameters always start with a question mark ?. This is followed by the name of the variable and the corresponding value, separated by an =. If an URL contains more than one parameter, they are separated by an Ampersand &.

Screenshot of Zaful.com showing GET parameters.

Using Get Parameters

GET parameters can be divided into active and passive. Active parameters modify the content of a page, for example, by:

- Filtering content:

?type=greendisplays only green products on an e-commerce site. - Sorting contents:

?sort=price_ascendingsorts the displayed products by price, in this case ascending.

Passive GET parameters, on the other hand, do not change a page's content and are primarily used to collect user data. Application examples are among others:

- Tracking of Session IDs: ?sessionid=12345 This allows visits of individual users to be saved if cookies were rejected.

- Tracking of website traffic:

?utm_source=googleURL parameters can be used to track where your website visitors came from. These UTM (Urchin Tracking Module) parameters work with analytics tools and can help evaluate the success of a campaign. Besidessource, there areutm_medium,utm_campaign,utm_term, andutm_content. More information can be found at Google's Campaign URL Builder.

Too many subpages with URL parameters can negatively impact a website's rankings. The most common problems regarding GET parameters are duplicate content, wasted crawl budget, and illegible URLs.

Duplicate content

Generating GET parameters, for example, based on website filter settings, can cause serious problems with duplicate content on e-commerce sites. When shop visitors can use filters to sort or narrow the content on a page, additional URLs are generated. This happens even though the content of the pages does not necessarily differ. The following example illustrates this problem:

One solution to this problem is to uniquely define the relationship between the pages using canonical tags.

Canonical tags indicate to search engines that certain pages should be treated as copies of a particular URL and all ranking properties should be credited to the canonical URL. A canonical tag can be inserted in the <head> area of the HTML document or alternatively in the HTTP header of the web page. If the canonical tag is implemented in the <head> area, the syntax is, for example:

<link rel="canonical" href="https://www.example.com/all-products.html"/>

If this link is added to all URLs that can result from different combinations of filters, the link equity of all these subpages is consolidated on the canonical URL and Google knows which page should be displayed in the SERPs.

Canonical tags are therefore a simple solution to guide search engine crawlers to the content they are supposed to index.

Waste of crawl budget

Google crawls a limited number of URLs per website. This amount of URLs is called crawl budget. More information can be found on the Seobility Blog.

If a website has many crawlable URLs due to the use of URL parameters, Googlebot might spend the crawl budget for the wrong pages. One method to prevent this problem is the robots.txt. This can be used to specify that the Googlebot is not supposed to crawl URLs with certain parameters.

Illegible URLs

Too many parameters within a URL can make it difficult for users to read and remember the URL. Worst case, this can damage usability and click-through rates.

Generally, duplicate content, as well as problems with crawl budget, can be at least partially prevented by avoiding unnecessary parameters in a URL.

Related links

Similar articles

| About the author |

|