Noindex

What is noindex?

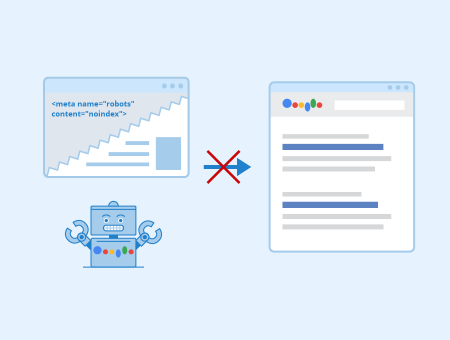

Noindex is a value that can be used in the robots meta tag in the HTML code of a website. It addresses the crawlers of search engines such as Google, Bing, and Yahoo. If a crawler finds this value in the meta tags of a web page, the page is not included in the search engine's index and is therefore not shown to users in search results. The counterpart to noindex is “index”, which explicitly allows indexing.

With noindex, you decide whether a particular web page should be included in the index of search engines. This makes noindex an easy way to control the indexing of each individual subpage, and therefore an important instrument of search engine optimization (SEO). Google always adheres to a noindex directive, whereas “index” is only treated as a recommendation.

What is the directive used for and when does it (not) make sense?

With the help of noindex, you can exclude pages from the index of search engines that would provide users with little or no added value if they were shown in search results. This includes, for example, sitemaps or the results of an internal search function. Subpages with sensitive data or password-protected download and member areas can also be excluded from indexing this way.

Indexing is also not always advisable for pages with duplicate, similar, or paginated content. If such pages are excluded, Google does not evaluate them as duplicate content, and the individual subpages do not compete with each other in the rankings. This can be useful for category pages in online shops, for example. If the products can be sorted according to different criteria, overlaps occur: when users sort a small number of items by size, products that are available in several sizes appear in several category views. Excluding these pages from indexing prevents this duplication. Note, however, that noindex also discards any relevance these pages have gained, for example through backlinks. For duplicate content, you should therefore always use a canonical tag: it consolidates the link equity of the affected pages onto the canonical URL and at the same time signals to Googlebot which page should be included in the index.
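To make the canonical-tag approach concrete, here is a minimal sketch that maps the sorted variants of a shop category page onto a single canonical URL and builds the matching link element. It assumes, hypothetically, that sorting is expressed through the query parameters `sort` and `order`. A real shop would need its own list of parameters that do not change the listed products.

```python
import html
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical query parameters that only change the sort order of a category
# page; they do not change which products are listed.
SORT_PARAMS = {"sort", "order"}


def canonical_url(url: str) -> str:
    """Drop sort-only parameters (and any #fragment) to get the canonical URL."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in SORT_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def canonical_link_tag(url: str) -> str:
    """Build the <link rel="canonical"> element for the page's head section."""
    return f'<link rel="canonical" href="{html.escape(canonical_url(url))}">'
```

With this rule, `https://shop.example/shoes?sort=size` and `https://shop.example/shoes?order=desc` both point to `https://shop.example/shoes`. Signals from these variants are then consolidated onto one URL instead of being discarded by noindex.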

Another frequent use case for noindex is pagination, which is often used for long texts or image series. Here it may make sense to index only the first page, so that users land at the beginning of a picture series rather than in the middle of it. However, noindex does not always make sense in this context. Long editorial articles in particular rarely contain all of their useful information on the first page, so excluding the following pages can cost traffic and harm SEO. To avoid this, you should use rel="next"/"prev" for pagination. Note that Google announced in 2019 that it no longer uses rel="next"/"prev" as an indexing signal, although other search engines may still evaluate it.
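The following is a minimal sketch of generating rel="prev"/"next" link elements for one page of a paginated series. The URL scheme is a hypothetical assumption: page 1 is the base URL itself and later pages use a `?page=n` parameter. Whether a given search engine evaluates these hints is up to that engine.

```python
from __future__ import annotations


def pagination_links(base_url: str, page: int, last_page: int) -> list[str]:
    """Return rel="prev"/"next" link elements for page `page` of `last_page`.

    Assumes a hypothetical URL scheme: page 1 is base_url itself and
    page n > 1 is base_url + "?page=n".
    """
    if not 1 <= page <= last_page:
        raise ValueError("page out of range")

    def url_for(n: int) -> str:
        return base_url if n == 1 else f"{base_url}?page={n}"

    links = []
    if page > 1:  # the first page has no predecessor
        links.append(f'<link rel="prev" href="{url_for(page - 1)}">')
    if page < last_page:  # the last page has no successor
        links.append(f'<link rel="next" href="{url_for(page + 1)}">')
    return links
```

For the middle page of a three-page gallery, the function returns one `prev` link to the base URL and one `next` link to `?page=3`. Every page stays indexable.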

How to implement noindex

One way to exclude a web page from indexing is to add noindex to the robots meta tag in that page's metadata. This meta tag contains instructions for the crawlers of Google and other search engines. To exclude a page from the search engine index, insert the following tag into the head section of the HTML code:

<meta name="robots" content="noindex">

Instead of addressing all crawlers, you can also address a specific search engine with this meta tag. For example, if you want to prevent only Googlebot from indexing a certain subpage, replace the value of the name attribute with "googlebot". Yahoo’s bot is called "slurp". In SEO practice, however, excluding only individual bots rarely makes sense.
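To illustrate how the generic robots tag and a bot-specific tag combine, here is a minimal sketch built on Python's standard `html.parser`. For a given bot, it collects the directives from `<meta name="robots">` and from the meta tag named after that bot. This is a simplified model for illustration. Real crawlers apply their own, more detailed rules.

```python
from __future__ import annotations

from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives of every <meta name="..." content="..."> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.tags: dict[str, set[str]] = {}  # lowercased meta name -> directives

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        meta_name = attributes.get("name", "").strip().lower()
        if not meta_name:
            return
        # Directives are comma-separated, e.g. content="noindex, follow".
        content = attributes.get("content", "")
        directives = {d.strip().lower() for d in content.split(",") if d.strip()}
        self.tags.setdefault(meta_name, set()).update(directives)


def directives_for(html: str, bot: str) -> set[str]:
    """Directives for `bot`: the generic "robots" tag plus the bot's own tag."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.tags.get("robots", set()) | parser.tags.get(bot.lower(), set())


def is_indexable(html: str, bot: str) -> bool:
    """A page is indexable for `bot` unless a noindex directive applies to it."""
    return "noindex" not in directives_for(html, bot)
```

A page containing `<meta name="googlebot" content="noindex">` is reported as not indexable for "googlebot" but still indexable for "bingbot". A `<meta name="robots" content="noindex">` tag applies to every bot, including "slurp".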

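Alternatively, you can implement the noindex directive via a field in the HTTP response header. This also works for non-HTML files such as PDFs, which have no head section for a meta tag. To do so, configure your server to send the following header field with the response: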

X-Robots-Tag: noindex
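As one possible server-side setup, here is a minimal sketch of a WSGI middleware (standard Python web server interface, PEP 3333). It adds the `X-Robots-Tag: noindex` field to every response whose path starts with one of a few prefixes. The prefixes `/search` and `/members` are hypothetical stand-ins for internal search results and a member area. Matching is a plain string-prefix check.

```python
# Hypothetical path prefixes for pages that should stay out of the index,
# for example internal search results and a member area.
NOINDEX_PREFIXES = ("/search", "/members")


def noindex_middleware(app, prefixes=NOINDEX_PREFIXES):
    """Wrap a WSGI app so responses for matching paths carry X-Robots-Tag: noindex."""

    def wrapped(environ, start_response):
        path = environ.get("PATH_INFO", "")
        if not path.startswith(tuple(prefixes)):
            return app(environ, start_response)

        def start_with_header(status, headers, exc_info=None):
            # Append the header field to whatever headers the app sets.
            return start_response(status, list(headers) + [("X-Robots-Tag", "noindex")], exc_info)

        return app(environ, start_with_header)

    return wrapped


def demo_app(environ, start_response):
    """A trivial WSGI app used only to demonstrate the middleware."""
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"hello"]


application = noindex_middleware(demo_app)

# To try it locally (standard library only):
# from wsgiref.simple_server import make_server
# make_server("localhost", 8000, application).serve_forever()
```

Because the header is added at the HTTP level, the same approach covers downloads such as PDFs under a matching path.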

Combining noindex with follow or nofollow

Optionally, you can combine the noindex directive with the values “follow” or “nofollow” (for example, content="noindex, follow"). These tell search bots how to handle the links on the non-indexed page: if follow is set, search bots follow the links on that page. This combination is often used for HTML sitemaps. Indexing an HTML sitemap rarely makes sense, but from an SEO point of view the sitemap is valuable because Google and other search engines can reach every subpage of a website in just a few steps.

The follow directive can also be useful for SEO with regard to paginated category pages or result pages of the internal search function of online shops. Many website operators decide not to index such subpages because of their low information content and potential duplicate content issues. In this case, the value "follow" is useful to ensure that search engines can still find and index the individual products offered in those categories.

Note, however, that Google stops following the links on a noindex page after a certain period of time. This approach is therefore of limited use as a long-term SEO strategy.

In contrast to follow, the nofollow directive tells crawlers not to follow the links on a page.
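The following toy simulation shows the effect of these combinations. It is a minimal sketch assuming a hypothetical site model in which each URL maps to its robots directives and outgoing links. A breadth-first crawl indexes pages without noindex and follows links unless nofollow is set. It does not model Google's behavior of eventually ignoring links on long-standing noindex pages, and real crawlers may also find pages through external links.

```python
from __future__ import annotations

from collections import deque


def crawl(site: dict[str, tuple[set[str], list[str]]], start: str) -> tuple[list[str], set[str]]:
    """Return (indexed pages in crawl order, all discovered pages)."""
    indexed: list[str] = []
    discovered = {start}
    queue = deque([start])
    while queue:
        url = queue.popleft()
        directives, links = site.get(url, (set(), []))
        if "noindex" not in directives:
            indexed.append(url)
        if "nofollow" in directives:
            continue  # links on this page are not followed
        for link in links:
            if link not in discovered:
                discovered.add(link)
                queue.append(link)
    return indexed, discovered


# Hypothetical shop: an HTML sitemap with noindex, follow and an internal
# search page with noindex, nofollow.
example_site = {
    "/": (set(), ["/sitemap", "/search"]),
    "/sitemap": ({"noindex", "follow"}, ["/shoes", "/shirts"]),
    "/search": ({"noindex", "nofollow"}, ["/hidden"]),
    "/shoes": (set(), []),
    "/shirts": (set(), []),
    "/hidden": (set(), []),
}
```

Running `crawl(example_site, "/")` indexes `/`, `/shoes`, and `/shirts`. The sitemap itself stays out of the index but still leads the crawler to the product pages. `/hidden` is never discovered because its only link sits on a nofollow page.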

Difference from the "disallow" directive in a robots.txt file

With the disallow directive in a robots.txt file, you can tell search bots not to crawl the areas defined there. It is used, for example, for files such as images in large databases, to save valuable crawl budget. However, you should not use this directive to keep content out of the index: disallow only prohibits crawling a page, and the page can still appear in the index if backlinks from other websites point to it.

For this reason, you should never combine noindex and disallow on the same page. Because crawlers always fetch the robots.txt file first when crawling a website, they see the disallow directive first and consequently do not crawl the affected subpages. As a result, they cannot see any noindex directive on these pages and may still include them in the index if other websites link to them. If you don’t want a page to be included in the index of search engines, you should therefore use noindex alone.
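To catch this conflict before publishing, you could run a small check like the following sketch. It uses Python's standard `urllib.robotparser` to flag a page that carries noindex but is blocked by robots.txt. The function name and the "Googlebot" default are illustrative assumptions. Python's parser follows the classic robots.txt rules and may not match Google's own parser in edge cases such as wildcard patterns.

```python
from urllib.robotparser import RobotFileParser


def noindex_conflict(robots_txt: str, url: str, page_has_noindex: bool,
                     user_agent: str = "Googlebot") -> bool:
    """True if `url` carries noindex but robots.txt stops crawlers from seeing it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    blocked = not parser.can_fetch(user_agent, url)
    return page_has_noindex and blocked
```

With a robots.txt containing `User-agent: *` and `Disallow: /private/`, a noindex page under `/private/` is flagged. Its noindex directive would never be read, so the disallow rule should be removed for that page.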
